GATE track 1 session

Revision as of 23:37, 31 August 2010

A full week of learning GATE (text mining, information extraction, and language processing), plus talks. Session wiki

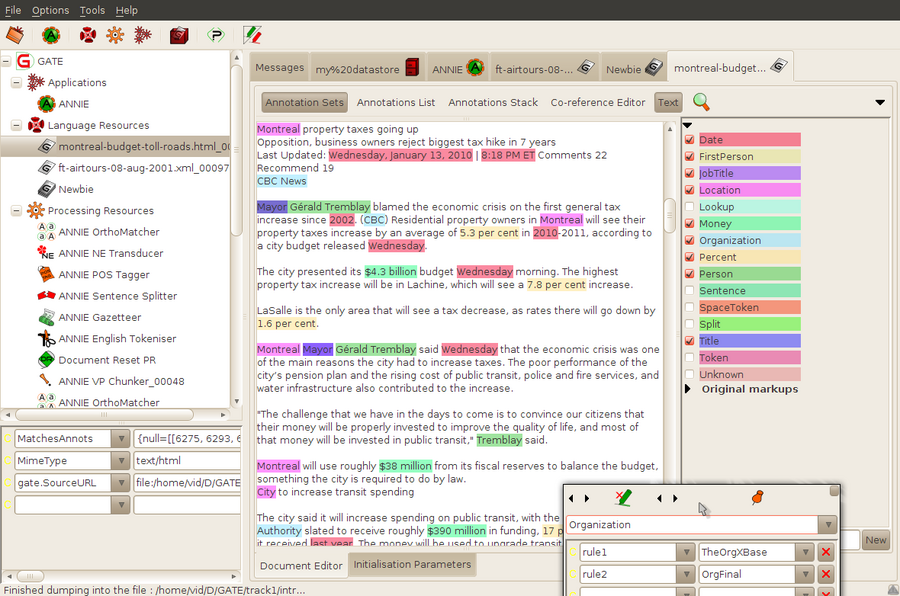

GATE is written in Java and is very Java-centric. This makes it portable and fast, but also heavyweight. A programming library is available. It's 14 years old and has many users and contributors.

Using GATE Developer

- GATE Developer is used to process sets of Language Resources (documents, grouped into a Corpus) using Processing Resources. The results are typically saved to a serialized Datastore.

- ANNIE, VG (verb group) processors.

- Preserve formatting embeds tags in HTML or XML.

- GATE's graph-based (node/offset) XML and preserved formatting (original XML/HTML) have different strengths.
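To make the last point concrete, here is a minimal sketch of the two representations. It is not GATE's code or file format: the `Annotation` class and `to_inline` function are hypothetical names, and the sketch assumes annotations never overlap (a case where offset-based storage still works but inline tags get awkward).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    start: int  # character offset where the span begins
    end: int    # character offset just past the span
    type: str   # annotation type, e.g. "Person"


def to_inline(text, annotations):
    """Render offset-based annotations as inline XML-like tags.

    Assumes the annotations do not overlap.
    """
    out = []
    cursor = 0
    for ann in sorted(annotations, key=lambda a: a.start):
        out.append(text[cursor:ann.start])  # untouched text before the span
        out.append(f"<{ann.type}>{text[ann.start:ann.end]}</{ann.type}>")
        cursor = ann.end
    out.append(text[cursor:])  # trailing text after the last span
    return "".join(out)


text = "John Smith was tried at the Old Bailey."
# Offset-based (standoff) form: the text is never modified.
standoff = [Annotation(0, 10, "Person"), Annotation(28, 38, "Location")]
# Inline form: tags are embedded in the text itself.
inline = to_inline(text, standoff)
print(inline)
```

The offset form leaves the source text intact and can hold many annotation layers over the same characters; the inline form is easier to read and to hand to other XML/HTML tools.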

Information Extraction

- IR - retrieve docs

- IE - retrieve structured data

- Knowledge Engineering - rule based

- Learning Systems - statistical

Old Bailey IE project - old English (Online)

- POS - part of speech, assigned as a feature on each Token (noun, verb, etc.)

- Gazetteer - gotcha: the initialization parameter listsURL has to be set before the gazetteer is loaded. Must also "save and reinitialize."

- Gazetteer creates Lookups, then the transducer creates named entities

- Then the orthomatcher does coreference: it associates named entities whose spellings have features in common

- Annotation Key sets and annotation comparing

- Need to list the Key set in the setsToKeep parameter of Document Reset for any pre-annotated texts, otherwise the manual annotations get wiped
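The first two stages above (gazetteer Lookups, then a rule that turns them into named entities) can be sketched in a few lines. This is only a toy illustration of the idea, not ANNIE or JAPE: the `GAZETTEER` entries, the type names, and the single "first name followed by a capitalised word" rule are all made up for the example. The orthomatcher stage is not covered.

```python
import re

# Hypothetical gazetteer lists: surface string -> major type.
GAZETTEER = {
    "Old Bailey": "location",
    "London": "location",
    "John": "person_first",
}


def gazetteer_lookup(text, gazetteer):
    """Return sorted (start, end, major_type) Lookup-style spans for each entry found."""
    lookups = []
    for entry, major_type in gazetteer.items():
        for m in re.finditer(r"\b" + re.escape(entry) + r"\b", text):
            lookups.append((m.start(), m.end(), major_type))
    return sorted(lookups)


def lookups_to_entities(text, lookups):
    """Toy rule stage: promote Lookups to named entities.

    A first-name Lookup followed by a capitalised word becomes a Person
    covering both words; a location Lookup becomes a Location as-is.
    """
    entities = []
    for start, end, major_type in lookups:
        if major_type == "person_first":
            m = re.match(r" [A-Z][a-z]+", text[end:])
            new_end = end + m.end() if m else end
            entities.append((start, new_end, "Person"))
        elif major_type == "location":
            entities.append((start, end, "Location"))
    return entities


text = "John Smith was tried at the Old Bailey in London."
lookups = gazetteer_lookup(text, GAZETTEER)
entities = lookups_to_entities(text, lookups)
for start, end, etype in entities:
    print(etype, repr(text[start:end]))
```

Note that "Smith" is not in any list; the entity only reaches it because the rule extends the Lookup. That is the division of labour in the notes: lists find candidates, rules decide what they are.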

Evaluation / Metrics

- Evaluation metric - machine output is scored mathematically against human-annotated text

- Scoring - performance measures for annotation types

- Result types - Correct, missing, spurious, partially correct (overlapped)

- Tools > Annotations Diff - comparing human vs machine annotation

- Corpus > Corpus quality assurance - compare by type

- (B has to be the generated set)

- Annotation set transfer (in tools) - transfer between docs in pipeline

- useful for, e.g., HTML that has boilerplate
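As a rough sketch of how the result types above turn into scores: compare the human (key) spans with the machine (response) spans for one annotation type, count correct / partially correct / missing / spurious, then compute precision, recall and F1. The function names are hypothetical and this is not the Annotation Diff implementation. Counting a partial match as half a match is an assumption here; a weight of 0 gives a strict score and 1 a lenient one.

```python
def diff_counts(key, response):
    """Count result types for (start, end) spans of a single annotation type."""
    key, response = set(key), set(response)
    exact = key & response                      # identical spans are correct
    unmatched_resp = sorted(response - exact)
    partial = 0
    missing = 0
    for k in sorted(key - exact):
        # First remaining response span that overlaps this key span, if any.
        hit = next((r for r in unmatched_resp if r[0] < k[1] and k[0] < r[1]), None)
        if hit is None:
            missing += 1
        else:
            partial += 1
            unmatched_resp.remove(hit)
    return {
        "correct": len(exact),
        "partial": partial,
        "missing": missing,
        "spurious": len(unmatched_resp),        # response spans matching nothing
    }


def scores(counts, partial_weight=0.5):
    """Return (precision, recall, f1); partial matches count as partial_weight."""
    matched = counts["correct"] + partial_weight * counts["partial"]
    resp_total = counts["correct"] + counts["partial"] + counts["spurious"]
    key_total = counts["correct"] + counts["partial"] + counts["missing"]
    precision = matched / resp_total if resp_total else 0.0
    recall = matched / key_total if key_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


key = [(0, 10), (28, 38), (42, 48)]        # human annotations
response = [(0, 10), (28, 31), (60, 65)]   # machine annotations
counts = diff_counts(key, response)
print(counts)
print(scores(counts))
```

Here the machine gets one span exactly right, one overlapping but too short, misses one, and invents one, so each result type is counted once.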

To investigate

- markupAware for HTML/XML (keeps tags in editor)

- AnnotationStack

- Advanced Options