GATE track 1 session

Revision as of 17:48, 3 September 2010

A full week of learning GATE for text mining, information extraction and language processing, plus talks. Session wiki

GATE is written in Java and is very Java-centric. This makes it portable and fast, but heavyweight. A programming library is available. It is 14 years old and has many users and contributors.

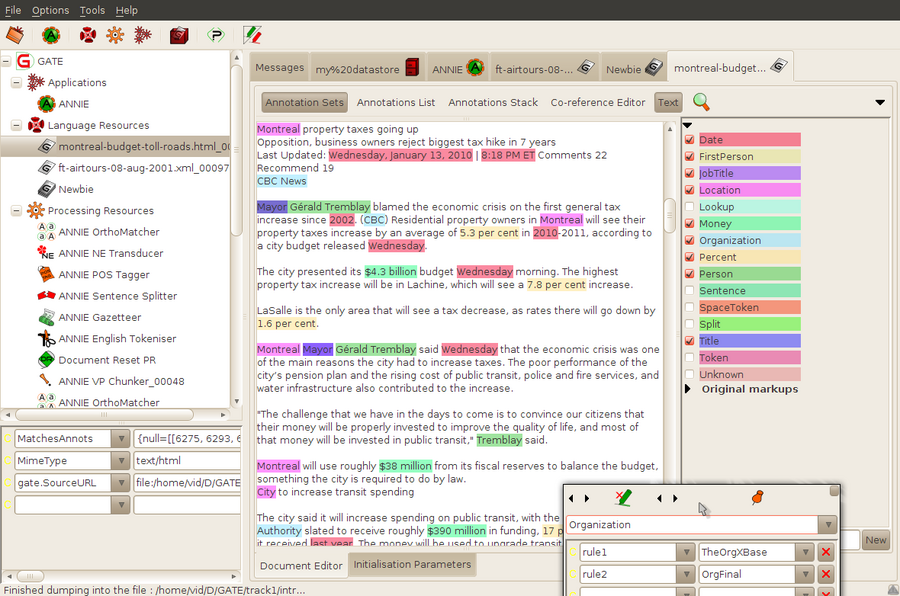

Using GATE Developer

- GATE Developer is used to process sets of Language Resources (e.g. documents) in a Corpus using Processing Resources. Documents and corpora are typically saved to a serialized Datastore.

- ANNIE and VG (verb group) processors.

- "Preserve formatting" embeds the annotations as tags in the original HTML or XML.

- GATE's own graph-based (node/offset) XML format and preserved formatting (the original XML/HTML) each have different strengths.
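The difference comes down to where the annotations live. GATE keeps them stand-off, each one pointing at start and end offsets in the text, whereas preserved formatting writes them back as inline tags. A minimal sketch of that conversion, assuming a hypothetical simplified Annotation class rather than GATE's own API:

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class Annotation:
    """Hypothetical, simplified stand-off annotation: a type plus character offsets."""
    type: str
    start: int  # offset of the first character covered
    end: int    # offset just past the last character covered


def to_inline_xml(text: str, annotations: list[Annotation]) -> str:
    """Render stand-off annotations as inline tags around the text they cover.

    Assumes non-empty spans that are disjoint or properly nested;
    crossing spans cannot be written as well-formed inline XML.
    """
    opens: dict[int, list[tuple[int, Annotation]]] = {}
    closes: dict[int, list[tuple[int, Annotation]]] = {}
    for idx, ann in enumerate(annotations):
        opens.setdefault(ann.start, []).append((idx, ann))
        closes.setdefault(ann.end, []).append((idx, ann))

    out: list[str] = []
    for pos in range(len(text) + 1):
        # Close inner spans (later start) before outer ones; identical
        # spans are closed in the reverse of the order they were opened.
        for _, ann in sorted(closes.get(pos, []), key=lambda p: (-p[1].start, -p[0])):
            out.append(f"</{ann.type}>")
        # Open outer spans (later end) before inner ones.
        for _, ann in sorted(opens.get(pos, []), key=lambda p: (-p[1].end, p[0])):
            out.append(f"<{ann.type}>")
        if pos < len(text):
            out.append(escape(text[pos]))
    return "".join(out)


text = "Sherlock Holmes lives in London."
annotations = [Annotation("Person", 0, 15), Annotation("Location", 25, 31)]
print(to_inline_xml(text, annotations))
# <Person>Sherlock Holmes</Person> lives in <Location>London</Location>.
```

Crossing spans (one annotation starting inside another and ending after it) cannot be written this way, which is one reason the offset-based representation is the more general of the two.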

Information Extraction

- IR - retrieve docs

- IE - retrieve structured data

- Knowledge Engineering - rule based

- Learning Systems - statistical

Old Bailey IE project - historical English (online)

- POS - the part of speech (noun, verb, etc.) is assigned as a feature of each Token

- Gazetteer - gotcha: the initialization parameter listsURL has to be set before it is loaded. You must also "save and reinitialize."

- The gazetteer creates Lookups, then the transducer creates named entities from them

- Then the orthomatcher links coreferring entities that have spelling features in common

- Key annotation sets and comparing annotations

- For pre-annotated texts, add the Key set to setsToKeep in Document Reset so it is not removed
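The gazetteer step is essentially list lookup: find list entries in the text and tag each hit with the majorType of the list it came from. A rough sketch, assuming a plain dict of lists instead of GATE's lists.def and .lst files and a naive \w+ tokeniser; the names build_gazetteer and annotate are made up for illustration:

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Lookup:
    """A Lookup-style annotation: the matched span plus the list's majorType."""
    start: int
    end: int
    major_type: str
    string: str


def build_gazetteer(lists: dict[str, list[str]]) -> dict[tuple[str, ...], str]:
    """Index every list entry as a lower-cased token tuple mapped to its majorType."""
    entries: dict[tuple[str, ...], str] = {}
    for major_type, names in lists.items():
        for name in names:
            entries[tuple(re.findall(r"\w+", name.lower()))] = major_type
    return entries


def annotate(text: str, gazetteer: dict[tuple[str, ...], str]) -> list[Lookup]:
    """Return Lookups for gazetteer entries found in text, preferring the longest match."""
    tokens = [(m.start(), m.end()) for m in re.finditer(r"\w+", text)]
    words = [text[s:e].lower() for s, e in tokens]
    max_len = max((len(key) for key in gazetteer), default=0)
    lookups: list[Lookup] = []
    i = 0
    while i < len(tokens):
        # Try the longest candidate first so "New York" wins over "York".
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            key = tuple(words[i:i + n])
            if key in gazetteer:
                start, end = tokens[i][0], tokens[i + n - 1][1]
                lookups.append(Lookup(start, end, gazetteer[key], text[start:end]))
                i += n
                break
        else:
            i += 1  # no entry starts at this token
    return lookups


gazetteer = build_gazetteer({
    "location": ["London", "New York"],  # hypothetical list contents
    "loc_key": ["street", "road"],
})
for lookup in annotate("He lived in New York near Baker Street.", gazetteer):
    print(lookup)
```

Preferring the longest match is a choice made for this sketch; the real gazetteer has its own matching options.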

Evaluation / Metrics

- Evaluation metrics - measured mathematically against human-annotated (key) texts

- Scoring - performance measures for annotation types

- Precision = correct / (correct + spurious)

- Recall = correct / (correct + missing)

- F-measure is the harmonic mean of precision and recall

- F = 2 ⋅ precision ⋅ recall / (precision + recall)

- GATE supports strict, lenient and average measures (strict counts partially correct matches as wrong, lenient counts them as correct, average is the mean of the two)

- Result types - Correct, missing, spurious, partially correct (overlapped)

- Tools > Annotation Diff - comparing human vs. machine annotation

- Corpus > Corpus quality assurance - compare by type

- (B has to be the generated set)

- Annotation Set Transfer (in the Tools plugin) - transfers annotations between annotation sets in a pipeline

- useful e.g. for HTML that has boilerplate
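To make the result types and the strict/lenient/average distinction concrete, here is a small sketch that classifies key versus response spans of one annotation type and computes precision, recall and F1. It assumes simplified greedy matching (identical offsets are correct, overlapping offsets are partially correct) and takes average as the mean of the strict and lenient figures; it is not GATE's Annotation Diff code, and compare, scores and Counts are hypothetical names.

```python
from dataclasses import dataclass

Span = tuple[int, int]  # (start, end) character offsets of one annotation


@dataclass
class Counts:
    correct: int = 0
    partial: int = 0
    missing: int = 0
    spurious: int = 0


def compare(key: list[Span], response: list[Span]) -> Counts:
    """Classify key vs. response spans of a single annotation type (greedy, simplified)."""
    counts = Counts()
    unmatched = list(response)
    leftover_key: list[Span] = []
    # Pass 1: identical offsets are correct.
    for span in key:
        if span in unmatched:
            unmatched.remove(span)
            counts.correct += 1
        else:
            leftover_key.append(span)
    # Pass 2: an overlapping response makes a key partially correct.
    for ks, ke in leftover_key:
        hit = next((r for r in unmatched if r[0] < ke and ks < r[1]), None)
        if hit is None:
            counts.missing += 1
        else:
            unmatched.remove(hit)
            counts.partial += 1
    # Any response spans left over matched nothing in the key.
    counts.spurious = len(unmatched)
    return counts


def scores(c: Counts, mode: str = "average") -> tuple[float, float, float]:
    """Precision, recall and F1, treating partial matches according to mode."""
    if mode == "average":
        st, le = scores(c, "strict"), scores(c, "lenient")
        return ((st[0] + le[0]) / 2, (st[1] + le[1]) / 2, (st[2] + le[2]) / 2)
    if mode not in ("strict", "lenient"):
        raise ValueError(f"unknown mode: {mode}")
    credited = c.correct + (c.partial if mode == "lenient" else 0)
    responses = c.correct + c.partial + c.spurious  # everything the system produced
    keys = c.correct + c.partial + c.missing        # everything the annotator marked
    p = credited / responses if responses else 0.0
    r = credited / keys if keys else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


counts = compare(key=[(0, 5), (10, 15), (20, 25)], response=[(0, 5), (11, 15), (30, 35)])
print(counts)  # Counts(correct=1, partial=1, missing=1, spurious=1)
for mode in ("strict", "lenient", "average"):
    print(mode, scores(counts, mode))
```

With no partial matches, the strict figures reduce to the plain precision and recall formulas in the list above.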

To investigate

- markupAware for HTML/XML (keeps tags in editor)

- AnnotationStack

- Advanced Options

JAPE

- Rules based on tokens and lookups

```
Phase: MatchingStyles
Input: Lookup
Options: control = first

Rule: Test1
(
  ({Lookup.majorType == location})?
  {Lookup.majorType == loc_key}
):match
-->
:match.Location = {rule=Test1}
```
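Read procedurally, Test1 creates a Location annotation, with feature rule=Test1, over every loc_key Lookup, extended to the left when a location Lookup comes immediately before it (as in "London Road"). The toy below mimics that in plain Python; it is not how the JAPE engine works (JAPE compiles grammars into finite-state transducers), and the Ann class and apply_test1 function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Ann:
    """Hypothetical minimal annotation: type, offsets and a feature map."""
    type: str
    start: int
    end: int
    features: dict[str, str] = field(default_factory=dict)


def apply_test1(lookups: list[Ann]) -> list[Ann]:
    """Toy re-implementation of rule Test1 under 'control = first'.

    Pattern: ({Lookup.majorType == location})? {Lookup.majorType == loc_key}
    Action:  :match.Location = {rule=Test1}
    Assumes lookups are sorted by offset and treats consecutive items as adjacent.
    """
    created: list[Ann] = []
    i = 0
    while i < len(lookups):
        major = lookups[i].features.get("majorType")
        next_major = lookups[i + 1].features.get("majorType") if i + 1 < len(lookups) else None
        if major == "location" and next_major == "loc_key":
            # Optional element present: "location loc_key", e.g. "London Road".
            created.append(Ann("Location", lookups[i].start, lookups[i + 1].end, {"rule": "Test1"}))
            i += 2  # matching resumes after the matched span
        elif major == "loc_key":
            # Optional element absent: a bare loc_key still matches.
            created.append(Ann("Location", lookups[i].start, lookups[i].end, {"rule": "Test1"}))
            i += 1
        else:
            # A location with no loc_key after it cannot complete the pattern.
            i += 1
    return created


lookups = [
    Ann("Lookup", 0, 6, {"majorType": "location"}),    # "London"
    Ann("Lookup", 7, 11, {"majorType": "loc_key"}),    # "Road"
    Ann("Lookup", 20, 26, {"majorType": "loc_key"}),   # "Street"
    Ann("Lookup", 30, 35, {"majorType": "location"}),  # "Paris"
]
for ann in apply_test1(lookups):
    print(ann)
```

Because of Input: Lookup, only Lookup annotations take part in matching; the sketch approximates that by looking only at the Lookup list.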

To review, gotchas

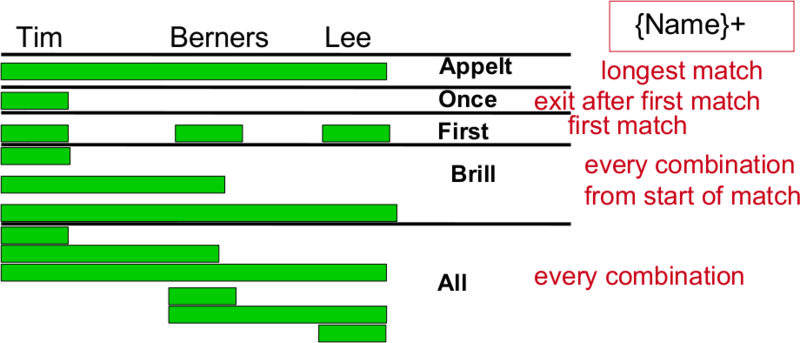

- Control styles: 'first' fires on the first match found, so longer (compound) matches are excluded

- With 'first', the pattern a? b applied to "a b" still matches all of "a b"

- multiplexor transducers

- multi-constraint statements

- macros

- Reusing annotations created by a rule has to be done in a separate rule
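The 'first' gotcha shows up most clearly with a Kleene operator, for example a hypothetical rule over ({Token.kind == number})+. The sketch below is a toy matcher over a list of token kinds, not GATE's engine, contrasting 'first', which fires as soon as one token has matched, with 'longest', which keeps extending from the same start position.

```python
def match_plus(kinds: list[str], target: str, control: str) -> list[tuple[int, int]]:
    """Matches of the pattern (target)+ over a sequence of token kinds.

    control="first":   fire on the first complete match (a single token).
    control="longest": extend the match as far as possible.
    Returns (start, end) token-index pairs, end exclusive.
    """
    if control not in ("first", "longest"):
        raise ValueError(f"unsupported control style: {control}")
    matches: list[tuple[int, int]] = []
    i = 0
    while i < len(kinds):
        if kinds[i] != target:
            i += 1
            continue
        end = i + 1
        if control == "longest":
            while end < len(kinds) and kinds[end] == target:
                end += 1
        matches.append((i, end))
        i = end  # matching resumes after the matched span
    return matches


kinds = ["word", "number", "number", "number", "word"]  # e.g. "call 555 12 34 now"
print(match_plus(kinds, "number", "first"))    # [(1, 2), (2, 3), (3, 4)]
print(match_plus(kinds, "number", "longest"))  # [(1, 4)]
```

Under 'first' the three numbers end up as three separate matches rather than one, which is why rules ending in +, * or ? behave unexpectedly with that control style.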

Matching types

To follow up

- WebSphinx crawler CREOLE plugin

Other notes

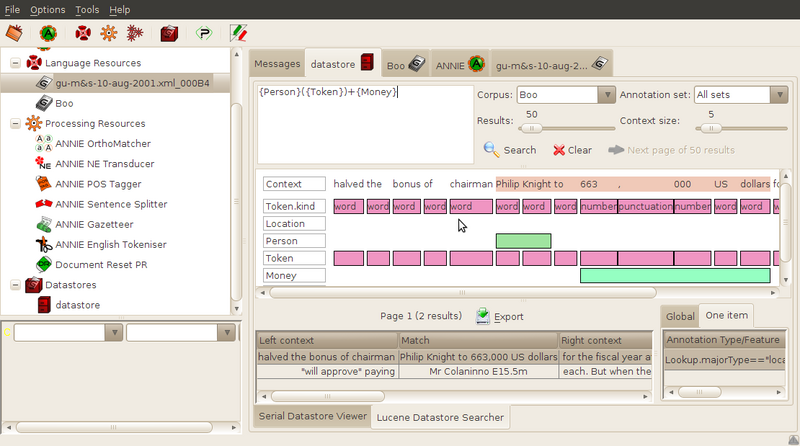

Lucene datastore and ANNIC

- Use <null> for the default annotation set

- Go to the datastore to run queries

- e.g. {Person}({Token})+{Money}

- Useful for debugging JAPE and results
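A rough way to picture what {Person}({Token})+{Money} asks for is to treat the document as a flat sequence of annotation types and translate the query into a regular expression. This is an illustrative assumption only: ANNIC itself works from a Lucene index, real annotations overlap (a Person also covers Tokens), and query_to_regex and search are hypothetical helpers.

```python
import re


def query_to_regex(query: str) -> re.Pattern:
    """Translate a tiny subset of the query syntax ({Type}, ( ), +, *, ?) into a regex.

    Each annotation type is encoded as "<Type>" so that types cannot run together.
    """
    compact = re.sub(r"\s+", "", query)
    return re.compile(re.sub(r"\{(\w+)\}", r"(?:<\1>)", compact))


def search(query: str, types: list[str]) -> list[tuple[int, int]]:
    """Return (first, last + 1) annotation indices of each non-overlapping match."""
    encoded = ""
    boundary_index: dict[int, int] = {}  # character offset -> annotation index
    for i, t in enumerate(types):
        boundary_index[len(encoded)] = i
        encoded += f"<{t}>"
    boundary_index[len(encoded)] = len(types)
    pattern = query_to_regex(query)
    return [(boundary_index[m.start()], boundary_index[m.end()]) for m in pattern.finditer(encoded)]


doc_types = ["Token", "Person", "Token", "Token", "Money", "Token"]
print(search("{Person}({Token})+{Money}", doc_types))  # [(1, 5)]
```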

Demos

- Mímir for querying large volumes of data (uses MG4J)

- Translating parts of speech between languages using Compound editor and Alignment editor

- Predicate extractor (MultiPaX)

- Mixed results at best

- OwlExporter

- NLP ontology

Conclusions

While it can do a lot out of the box and benefits from long development and broad connectivity, it needs usability testing to be useful to anyone beyond patient specialists. A lot of things are non-obvious or too domain-specific, and with a bit of work could be more broadly useful. The interface could offer much more immediate, useful and interesting displays. A web-based version could have these features. However, the team seems somewhat ambivalent about that kind of development. :)

Looking forward to learning about programming using GATE libraries.